Generative User Interfaces

Building tools for design on the fly from text prompts

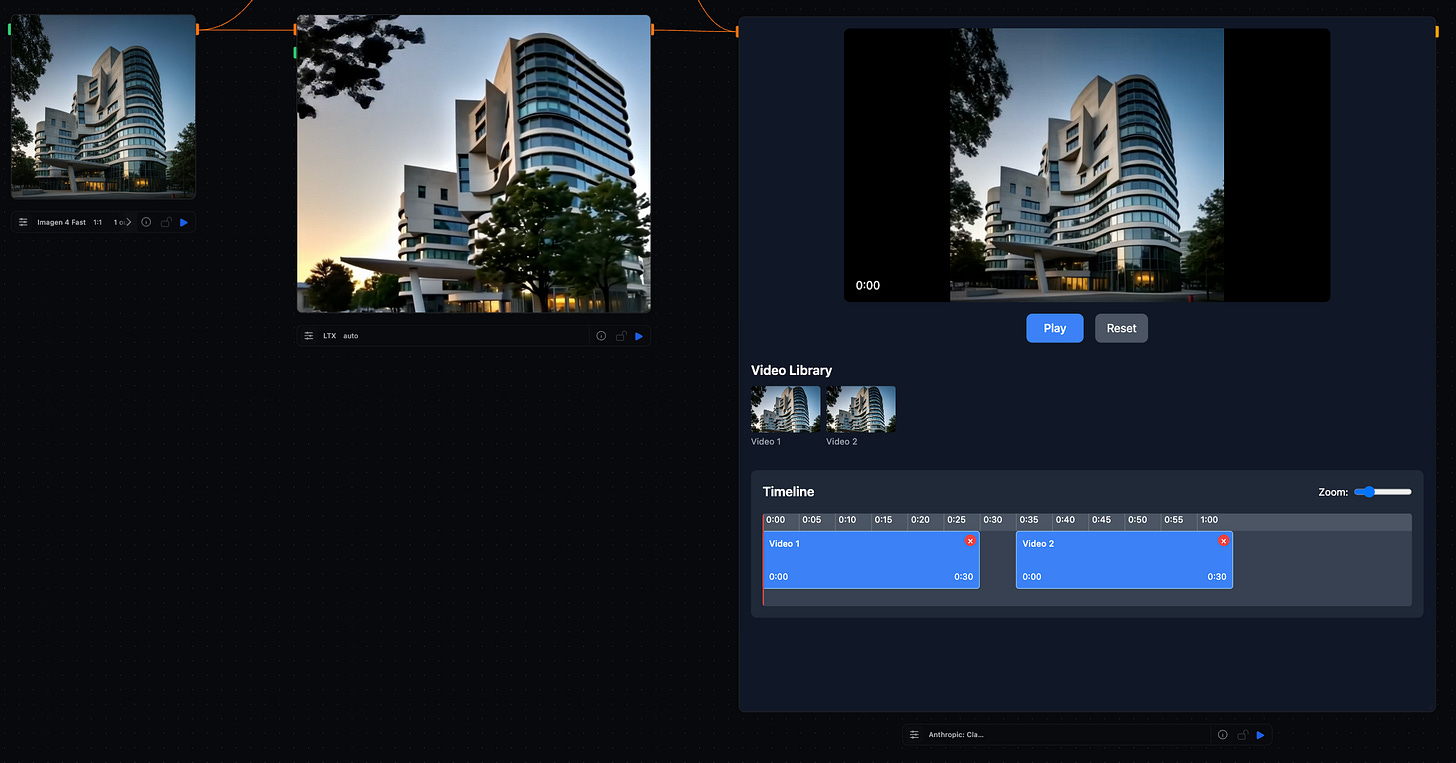

A user-generated video editing node in Runchat

I would really like people to use Runchat to not only create and automate workflows, but also build their own design tools. This includes tools for compositing images and videos, drawing and painting masks or laying out images and text that all require manual input and aren’t really intended to be (entirely) procedurally automated. If Runchat doesn’t currently do what someone wants, ideally they could simply ask for a new user interface that performs that task and then just carry on working. I want people to be able to change Runchat while they use it.

Nodes in Runchat can be thought of as isolated micro-applications. They take some input from the user or from elsewhere in the workflow and do something with it to perform some task. Nodes are an ideal candidate for generative user interfaces because we can keep individual nodes relatively simple and instead build complexity by chaining multiple nodes together. Generated nodes tend to be useful very quickly because they provide solutions to gaps in specific functionality. For instance, if you need a way to overlay one image on top of another for a product placement mock up you could generate a UI for doing this specific task, but leave the actual image generation, image upload or post-processing to other nodes in the workflow. This is the opposite of vibe-coding entire applications from scratch.

Ephemeral Interfaces

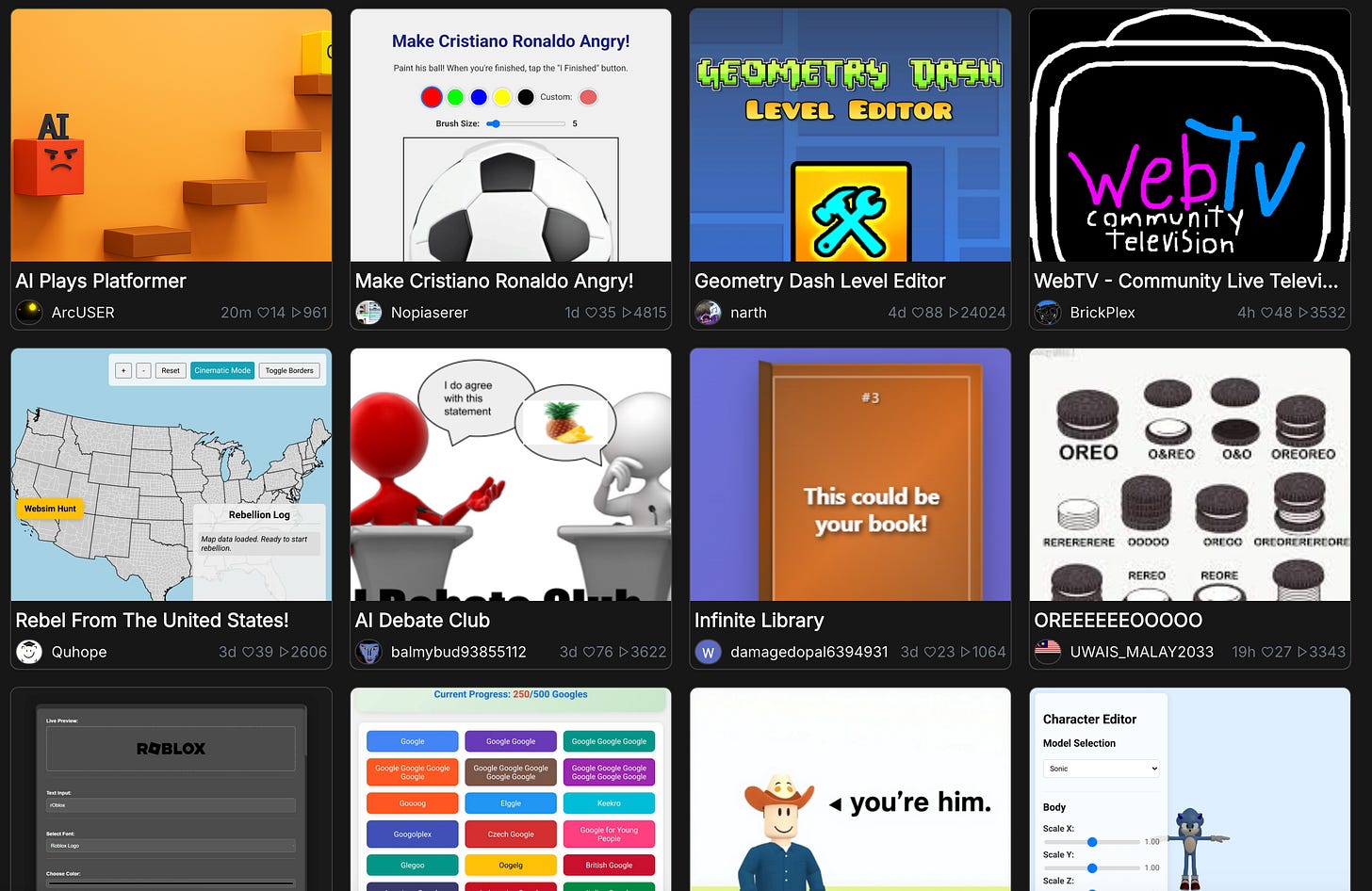

There has been a lot of ruminating over the past few years about the idea of software that generates its own user interface on the fly. One manifestation of this idea is websim, a tool for generating HTML sites that can contain links to other entirely fictional sites. When a user clicks on one of these links the page content (including UI) is generated on the fly instead of loaded from pre-existing HTML. What originally began as a joke during a hackathon has become something that is used by a lot of people for both entertainment and very rough software prototyping. The home page of websim.ai is part random websites and part random apps, all generated from the whims of users.

Above: Websim (2025). Below: Geocities (1999ish)

Geocities 30 years on

Digging around in websim feels like early Geocities internet. There is no visual consistency to anything, and comically atrocious pixelated images and jarring colour schemes abound. It also feels like a great place to pick up malware. You can’t really trust what any app does, and deception is almost part of the aesthetic. Some apps have links to “Free Robux”, which speaks to the creator and target demographics. And this gets to the root of user-generated interfaces. It is an exciting and utopian idea to build software applications that allow their users to be maximally creative and bend the software itself to suit their needs. This is why kids are using websim. But this also makes it difficult or impossible to create a trustworthy brand and opens up all sorts of opportunities for sketchy behaviour when this user-generated content is shared on the internet and used by others.

Sandboxing Generated Code

In a blog article called “How to build a plugin system on the web and also sleep well at night”, Rudi Chen describes how Figma went about finding ways to allow developers to run arbitrary code within their app without compromising on a long list of essential product and security concerns. There are several primary risks to running user generated code within an existing software application:

User generated code can potentially interact with the parent application and access sensitive user data

User generated code can potentially interact with the server to, again, access sensitive user data or change app behaviour

User generated code can “phone home” and send information about the user or current state of the application to malicious actors

User generated code can spoof legitimate UI and phish users for passwords and other information

Etc.

This is why Rudi was losing sleep: the risks of something going wrong by allowing people to run arbitrary JavaScript in your app are quite high. There are several approaches to mitigating these risks and they are covered in the Figma article:

Run user generated code in an iframe

Compile user generated code to WebAssembly

Use JavaScript Proxy objects

Runchat already uses QuickJS (effectively the second approach above) to sandbox user generated code that runs on our server. But to allow users to build interactive UIs in Runchat, we need it to run in the browser. While Figma ended up moving on from the iframe approach because of the need to frequently interact with a large parent document, Runchat doesn’t have this problem. We only need users to interact with the context of a node. Specifically, our user generated code only needs to be able to read the node inputs, and set the node outputs. Runchat also already serialises all communication between nodes to strings as nodes need to be able to run on the server and transmit data back to the browser client. Consequently the iframe postMessage API is a perfect fit for our needs and provides a (relatively) secure way for sketchy user generated code to interact with our parent application.
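As a rough sketch of what such a string-based protocol could look like, here is one way the parent might encode node inputs and validate whatever comes back from the iframe. The message shapes (`kind`, `inputs`, `outputs`) and the function names are assumptions made up for illustration, not Runchat’s actual API.

```typescript
// Hypothetical message shapes; the real Runchat protocol may differ.
type HostToNodeMessage = { kind: "inputs"; inputs: Record<string, string> };
type NodeToHostMessage = { kind: "outputs"; outputs: Record<string, string> };

// Serialise the node inputs to a string before sending them to the iframe.
function encodeInputs(inputs: Record<string, string>): string {
  const message: HostToNodeMessage = { kind: "inputs", inputs };
  return JSON.stringify(message);
}

// Validate an untrusted payload received from the sandboxed iframe.
// Returns null for anything that is not a well-formed "outputs" message.
function parseNodeMessage(raw: unknown): NodeToHostMessage | null {
  if (typeof raw !== "string") return null;
  let data: unknown;
  try {
    data = JSON.parse(raw);
  } catch {
    return null;
  }
  if (typeof data !== "object" || data === null) return null;
  const candidate = data as { kind?: unknown; outputs?: unknown };
  if (candidate.kind !== "outputs") return null;
  const outputs = candidate.outputs;
  if (typeof outputs !== "object" || outputs === null || Array.isArray(outputs)) {
    return null;
  }
  // Copy only string values so nothing unexpected reaches the workflow.
  const clean: Record<string, string> = {};
  for (const key of Object.keys(outputs)) {
    const value = (outputs as Record<string, unknown>)[key];
    if (typeof value !== "string") return null;
    clean[key] = value;
  }
  return { kind: "outputs", outputs: clean };
}
```

In the browser, the parent would pass the result of `encodeInputs` to `iframe.contentWindow.postMessage` and run `parseNodeMessage` on `event.data` inside its `message` listener, ideally after checking that `event.source` is the expected iframe window.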

All we need to do is inject a user’s JavaScript code into a sandboxed iframe that doesn’t share the parent’s origin (that is, one whose sandbox attribute omits allow-same-origin), render it on our canvas, and we have a fairly secure sandbox environment. This doesn’t prevent the user generated code having malicious intent, but this can be handled with an approvals flow before custom nodes are loaded in a workflow.
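A minimal sketch of the injection step, assuming the iframe is populated through the standard `srcdoc` attribute and sandboxed with `allow-scripts` only. The helper names and the shape of the wrapper document are hypothetical; the actual Runchat implementation may differ.

```typescript
// Sandbox tokens for the iframe: scripts may run, but "allow-same-origin" is
// deliberately omitted so the frame does not share the parent's origin.
const SANDBOX_TOKENS = "allow-scripts";

// Escape text so it can sit inside a double-quoted HTML attribute.
function escapeAttribute(text: string): string {
  return text
    .replace(/&/g, "&amp;")
    .replace(/"/g, "&quot;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;");
}

// Wrap user code in a minimal HTML document. A literal "</script" inside the
// user code would end the script element early, so the slash is escaped.
function buildSandboxDocument(userCode: string): string {
  const safeCode = userCode.replace(/<\/(script)/gi, "<\\/$1");
  return [
    "<!doctype html>",
    "<html>",
    "<body>",
    '<div id="root"></div>',
    "<script>",
    safeCode,
    "</script>",
    "</body>",
    "</html>",
  ].join("\n");
}

// Produce the iframe markup that the parent application would render.
function buildIframeMarkup(userCode: string): string {
  const srcdoc = escapeAttribute(buildSandboxDocument(userCode));
  return '<iframe sandbox="' + SANDBOX_TOKENS + '" srcdoc="' + srcdoc + '"></iframe>';
}
```

Building the markup as a string keeps the sketch independent of the DOM. In a real app the same two attributes could be set on an iframe element directly.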

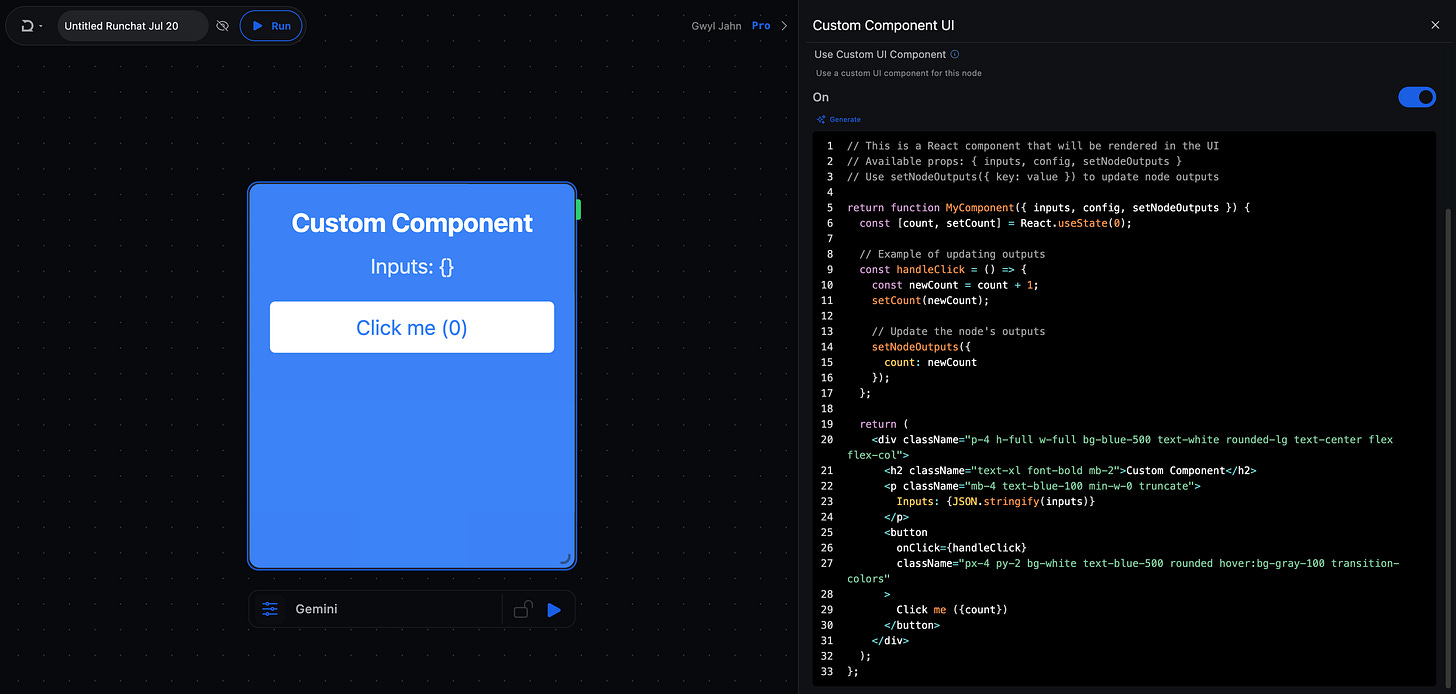

A template for creating nodes using React components

Generating React Components

The goal of our generative UI is to make it as effortless and fast as possible for someone to fill in a gap in Runchat’s functionality and stay in creative flow. This means that it isn’t a great idea to force users to learn our API and then write custom components from scratch in JavaScript - no one would do this. Instead we want to lean heavily on language models to write all of our code, with users able to make changes by prompting the language model and seeing the result update immediately on the canvas. For more experienced developers it should be possible to make changes to the code directly, but we would hope that the requirement for this is kept to a minimum.

In the earlier article on visual vibe coding, I talked about generating interactive node outputs in HTML and JavaScript. We could have opted for this approach with custom nodes, with users essentially writing whatever JavaScript they want and using HTML to render it. But this would mean that there would be lots of different patterns and approaches to generating stateful UI, and most code generated by language models would likely try to write React components. It makes sense to lean into this bias for React, and to encourage language models to think about generating a single UI element (a node) by enforcing returning a single React component as our generative UI.

This pattern also makes it relatively easy to prompt language models about the bounds of the code they can generate. We can ask the code to always return exactly one functional React component with the exact props that we inject into our iframe. We can define the constraints of the sandbox (e.g. no import statements) and our styling system (Tailwind). Then within these constraints and within this React component, the generated code can do (almost) whatever it likes.
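Constraints like these can also be checked cheaply before any code reaches the sandbox. The sketch below is a heuristic, regex-based check written for illustration only: `checkGeneratedCode` and its rules are assumptions, and a production version would more likely parse the code properly.

```typescript
interface ConstraintReport {
  ok: boolean;
  problems: string[];
}

// Heuristic check of generated source against the sandbox constraints.
// Regexes can be fooled by comments and strings, so treat this as a first
// filter rather than a security boundary.
function checkGeneratedCode(source: string): ConstraintReport {
  const problems: string[] = [];

  // Static imports such as `import React from "react"` are not allowed.
  if (/^\s*import[\s{"'*]/m.test(source)) {
    problems.push("static import statement");
  }
  // Dynamic imports and CommonJS requires are not allowed either.
  if (/\bimport\s*\(/.test(source)) {
    problems.push("dynamic import");
  }
  if (/\brequire\s*\(/.test(source)) {
    problems.push("require call");
  }
  // Expect at least one PascalCase function or const, i.e. a component.
  const hasComponent =
    /\bfunction\s+[A-Z]\w*\s*\(/.test(source) ||
    /\b(?:const|let)\s+[A-Z]\w*\s*=/.test(source);
  if (!hasComponent) {
    problems.push("no React component found");
  }

  return { ok: problems.length === 0, problems };
}
```

Any problems found could be fed back to the language model as part of the next prompt, so the user never has to read them.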

Our “hello world” example is a stateful node that outputs a counter. Every time a user clicks a button it increments by one. Any changes to the code are immediately re-rendered on the node in the canvas, providing visual feedback on design changes and live debugging. Edits can be made by changing the code directly in the editor, or adding comments and then re-generating the entire component using a language model.
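For illustration, a counter node along these lines might look like the sketch below. The prop names (`inputs`, `setOutput`) are hypothetical, and React is passed in as an argument because the sandbox does not allow imports. In practice it might equally be exposed as a global. JSX is avoided so the sketch stays plain TypeScript.

```typescript
// Minimal subset of the React API that the sketch relies on. In the sandbox
// the real React object would be supplied instead (an assumption).
interface ReactSubset {
  useState<T>(initial: T): [T, (next: T) => void];
  createElement(
    type: string,
    props: { [key: string]: unknown } | null,
    ...children: unknown[]
  ): unknown;
}

// Hypothetical props handed to every generated node.
interface CounterNodeProps {
  inputs: { [key: string]: string };
  setOutput: (outputName: string, value: string) => void;
}

// Returns the component, closing over whichever React object was injected.
function createCounterNode(React: ReactSubset) {
  return function CounterNode(props: CounterNodeProps): unknown {
    const [count, setCount] = React.useState(0);

    // Update local state and publish the new value as a string output.
    const increment = (): void => {
      const next = count + 1;
      setCount(next);
      props.setOutput("count", String(next));
    };

    return React.createElement(
      "button",
      { className: "rounded bg-blue-600 px-3 py-1 text-white", onClick: increment },
      "Count: " + count
    );
  };
}
```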

Publishing and Use Cases

Users can run their own nodes immediately on the canvas. They can also be “published” so that they can be added to libraries and re-used in other workflows. Now that we have this in place, we can start building out a library of design tools that are missing from Runchat, and sharing these tools with the rest of the community.

Image Composition

There are a bunch of new image editing models like Flux Kontext or Gemini’s native image generation that are great at placing one image into another. However, these models are fairly unreliable at present and without finetuning produce inconsistent results. It is much more powerful to give designers the ability to roughly set up the composition of their image and then use these same image editing models to polish it so that it looks as realistic as possible.

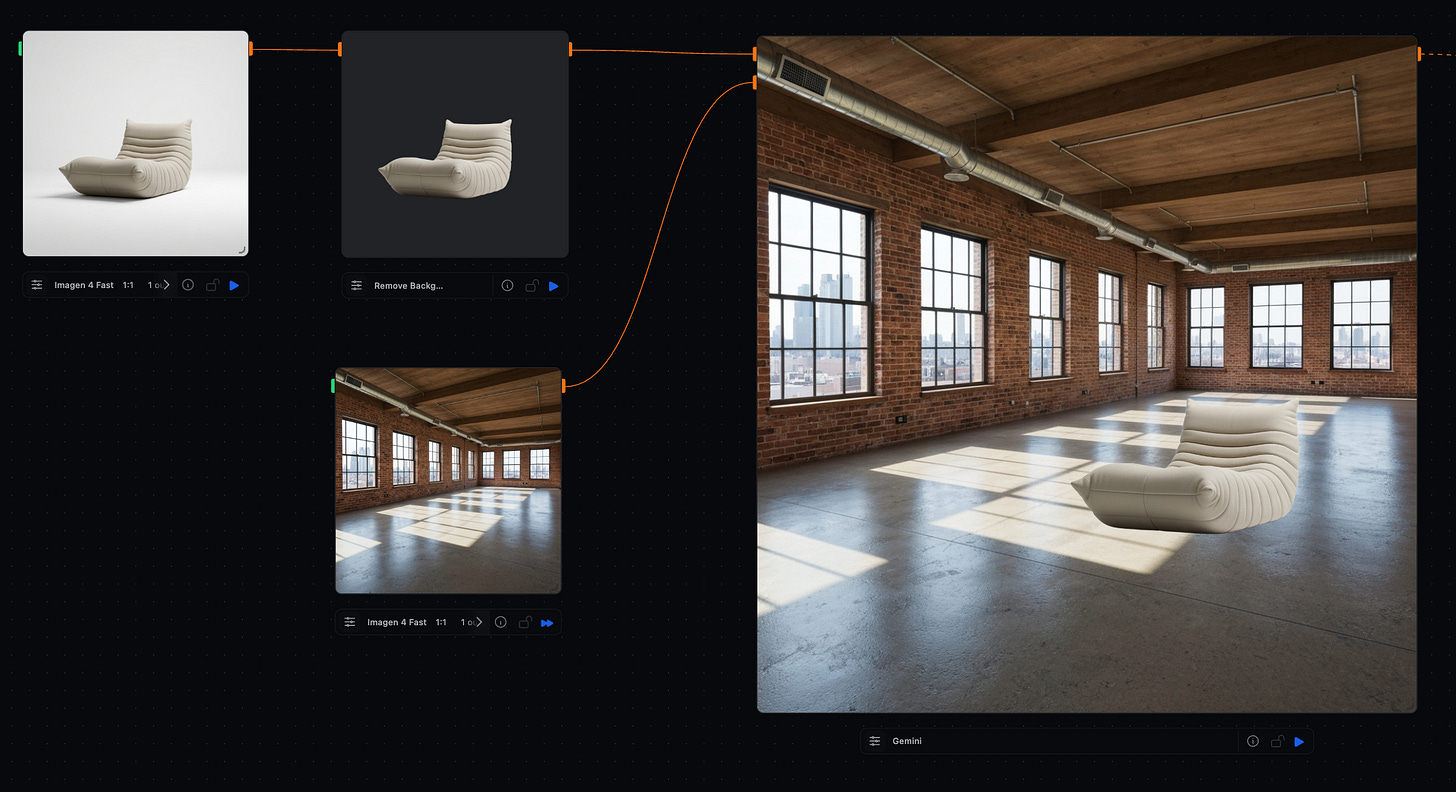

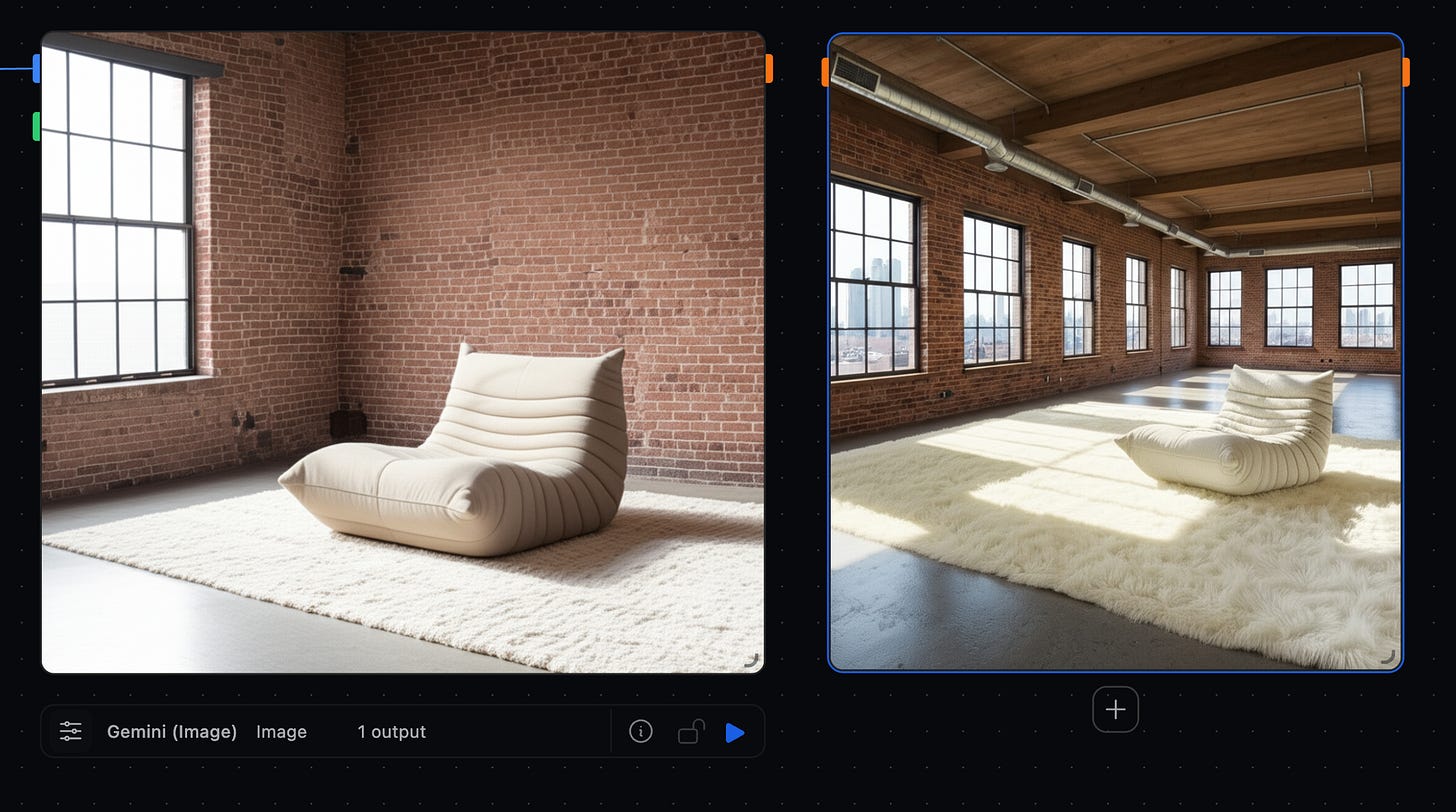

The image above shows a workflow where we first remove the background from a subject image and then use a custom node to place it over a background image. We can move and scale the subject however we want in our custom node, and output the composited result. This gives you much more control over the generated output:

Left: providing the subject and background image separately to Gemini. Right: providing the composite image to Gemini. Both images are generated with the same prompt. The composite keeps our space and subject consistent while adding realistic details.

Image Masking

Drawing image masks is another key use case for generated nodes. We need image masks for inpainting models, and I already spoke at length about image masking in the previous post. Our new generative node UI eliminates the need to extract the mask from the node via the clipboard, and makes it much easier to vibe-code a decent UI due to the constraint of a single React component with Tailwind.

Creating a tool for adjusting brightness and saturation in 1 minute

Image Editing

Up until now it is expected that people might save images generated in Runchat and make further edits to them in software like Lightroom or Photoshop. However if these tasks are relatively simple (e.g. adjusting levels) then we might as well have a node to do this directly within Runchat. Because these features are relatively common they are also really easy to one-shot using language models. We can then refine the UI of the component through iterative prompting.
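To give a sense of how little code such an adjustment needs, here is a sketch of a brightness and saturation pass over raw RGBA pixel data, the layout used by canvas `ImageData`. The function name and the choice of Rec. 601 luma weights are assumptions for illustration, not what a generated node would necessarily produce.

```typescript
// Adjust brightness and saturation of RGBA pixel data.
// brightness: 1 leaves pixels unchanged, 0 gives black, 2 doubles the values.
// saturation: 1 leaves pixels unchanged, 0 gives greyscale.
// Returns a new array; the input is left untouched.
function adjustPixels(
  rgba: Uint8ClampedArray,
  brightness: number,
  saturation: number
): Uint8ClampedArray {
  const out = new Uint8ClampedArray(rgba.length);
  for (let i = 0; i + 3 < rgba.length; i += 4) {
    const r = rgba[i];
    const g = rgba[i + 1];
    const b = rgba[i + 2];
    // Rec. 601 luma weights approximate the perceived grey level of the pixel.
    const grey = 0.299 * r + 0.587 * g + 0.114 * b;
    // Move each channel towards or away from grey, then scale for brightness.
    // Uint8ClampedArray rounds and clamps the result to the 0..255 range.
    out[i] = (grey + (r - grey) * saturation) * brightness;
    out[i + 1] = (grey + (g - grey) * saturation) * brightness;
    out[i + 2] = (grey + (b - grey) * saturation) * brightness;
    out[i + 3] = rgba[i + 3]; // alpha is passed through unchanged
  }
  return out;
}
```

In a node, the input would come from `getImageData` on a canvas and the result would be written back with `putImageData`, with two sliders driving the `brightness` and `saturation` arguments.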

Video Composition

It’s very easy to generate short videos in Runchat using models like LTX. The latest version of LTX can generate videos up to one minute long, and so the need for tools to cut up these clips and composite them together is getting real. It would be nice to be able to quickly splice several short videos together without needing to download and bring them into something like Clipchamp or Premiere. Language models are surprisingly good at generating UI for things like clips on timelines, and it is easy to create metadata that describes the start and end points of clips and where they are located on the timeline so that we could subsequently composite them together. This brings us to some of the challenges.
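The clip metadata described above could be as simple as the following sketch. The field names and the `exportTimeline` helper are hypothetical, chosen only to show the kind of data a timeline node might hand to the rest of the workflow as a string.

```typescript
// Hypothetical description of one clip on the timeline. Times are in seconds.
interface TimelineClip {
  sourceUrl: string;     // URL of the source video
  trimStart: number;     // where the clip begins within the source
  trimEnd: number;       // where the clip ends within the source
  timelineStart: number; // where the clip is placed on the timeline
}

// Validate the clips, order them by position and serialise them to JSON.
function exportTimeline(clips: TimelineClip[]): string {
  for (const clip of clips) {
    if (clip.trimEnd <= clip.trimStart) {
      throw new Error("Clip ends before it starts: " + clip.sourceUrl);
    }
    if (clip.timelineStart < 0) {
      throw new Error("Clip starts before the timeline: " + clip.sourceUrl);
    }
  }
  // Copy before sorting so the caller's array is not reordered.
  const ordered = clips.slice().sort((a, b) => a.timelineStart - b.timelineStart);
  // The timeline is as long as the end of the last clip to finish.
  let totalDuration = 0;
  for (const clip of ordered) {
    const clipEnd = clip.timelineStart + (clip.trimEnd - clip.trimStart);
    if (clipEnd > totalDuration) totalDuration = clipEnd;
  }
  return JSON.stringify({ clips: ordered, totalDuration: totalDuration });
}
```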

Challenges

If we then want to actually export a video, how would we handle this? I suspect that the best approach would be to export a JSON object from our video compositor tool and then do the actual compositing in a separate node with something like ffmpeg wasm. But this requires a decent amount of foresight and expertise on behalf of the person creating the custom nodes and would likely break flow. Even if we did manage to generate a video, how would we store it?

Elsewhere in Runchat we always return urls to generated assets instead of the raw data to prevent file sizes getting too large. This is practical because assets are always either uploaded by the user manually or returned as urls from third party services. However when we make changes to images or videos in the browser, we might be making changes constantly and it isn’t practical to upload all of these. This is just one of many challenges to implementing custom components:

How do we save data generated on the client?

How do we save state generated on the client so that changes can be reloaded?

How do we combine real-time UI in the browser with tasks that are better run on the server? Do we need to extend our postMessage API to allow calling Runchat nodes as tools?

Should we regenerate the component UI with every code edit? Or only when changes are saved?

How can we enforce consistent styling?

These challenges present a lot of exciting things to work through over the next few months. For now, we’ve made it possible for people to explore and share custom nodes that fill in gaps in Runchat’s functionality, especially for design.

If you’ve made it this far, try creating your own custom nodes in runchat.app and letting us know how you get on.