Look, we solved creative writing! (not yet)

As tools like OpenAI’s and Google’s Deep Research become more reliable, they are likely to impact a lot of knowledge work. My presumption about how Deep Research works is this: a bot takes a request from a user, decides on a set of search terms that might yield a suitable response, searches for these terms and checks whether a suitable response can be found, then repeats this process to fill in blanks or refine assumptions based on data gleaned from previously clicked links. Clicking the top link in Google is a fickle thing that isn’t guaranteed to provide the information you’re looking for, so language models often need to iterate through multiple search queries or click on multiple links until they find what they need.
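To make that presumption concrete, here is a minimal Python sketch of the loop. It assumes three hypothetical callables (`propose_terms`, `search` and `find_answer`) standing in for the language model and the search engine, plus toy data so it runs end to end. It is a guess at the shape of the process, not how any Deep Research product is actually implemented.

```python
from typing import Callable, Optional

def deep_research(
    request: str,
    propose_terms: Callable[[str, list[str]], list[str]],    # hypothetical: model picks search terms
    search: Callable[[str], list[str]],                      # hypothetical: returns snippets for a term
    find_answer: Callable[[str, list[str]], Optional[str]],  # hypothetical: model checks the notes
    max_rounds: int = 5,
) -> Optional[str]:
    """Search, check, refine and repeat until an answer is found or we give up."""
    notes: list[str] = []  # data gleaned from previously visited pages
    for _ in range(max_rounds):
        # Choose terms using the request plus everything learned so far
        for term in propose_terms(request, notes):
            notes.extend(search(term))
        answer = find_answer(request, notes)
        if answer is not None:
            return answer
    return None  # gave up after max_rounds

# Toy stand-ins (hypothetical) so the sketch runs end to end
corpus = {
    "runchat": ["Runchat is a visual programming tool"],
    "runchat loops": ["Runchat loops are capped at 10 iterations"],
}

def propose_terms(request: str, notes: list[str]) -> list[str]:
    # Broad term first, then a refined term once we have some context
    return ["runchat"] if not notes else ["runchat loops"]

def search(term: str) -> list[str]:
    return corpus.get(term, [])

def find_answer(request: str, notes: list[str]) -> Optional[str]:
    return next((n for n in notes if "iterations" in n), None)

print(deep_research("How long can a Runchat loop run?", propose_terms, search, find_answer))
```

The first round finds only general context, so the model refines its search term and finds the answer in the second round.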

Visual Research

Runchat comes from a lineage of visual programming tools that replace functions written in code with a graphical user interface. Each function becomes a node on a canvas and how data is passed from one function to another is represented as a coloured line between nodes. The graphical user interface makes it easy to swap out one function with another, or to edit the inputs or outputs to a function. What if we could use this same user interface to create a visual mapping of language model research? We could see:

A high-level overview of what websites the language model searched

The data collected from each website

How the language model decided to proceed based on this data

How the data informed the model’s final response to the query
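As a rough illustration of the node-and-edge model described above, here is a minimal Python sketch. It assumes a hypothetical `Node` type in which each node wraps a function and lists the upstream nodes it reads from. Nodes run in dependency order using the standard library’s `graphlib.TopologicalSorter`, and every intermediate output is kept. That retention is what would let us inspect which sites were searched and what each step returned. It is not Runchat’s actual implementation.

```python
from dataclasses import dataclass, field
from graphlib import TopologicalSorter
from typing import Any, Callable

@dataclass
class Node:
    id: str
    fn: Callable[..., Any]                           # the function this node replaces
    inputs: list[str] = field(default_factory=list)  # ids of upstream nodes (the edges)

def run_graph(nodes: list[Node]) -> dict[str, Any]:
    """Evaluate nodes in dependency order and keep every intermediate output."""
    by_id = {n.id: n for n in nodes}
    # TopologicalSorter takes a mapping of node -> predecessors (raises CycleError on cycles)
    order = TopologicalSorter({n.id: set(n.inputs) for n in nodes}).static_order()
    outputs: dict[str, Any] = {}
    for node_id in order:
        node = by_id[node_id]
        args = [outputs[i] for i in node.inputs]  # data flowing along the edges
        outputs[node_id] = node.fn(*args)
    return outputs

# A toy research graph with hypothetical URLs and page contents
graph = [
    Node("query", lambda: "visual programming"),
    Node("search", lambda q: [f"https://example.com/{q.replace(' ', '-')}"], ["query"]),
    Node("pages", lambda urls: {u: "<html>...</html>" for u in urls}, ["search"]),
    Node("answer", lambda q, pages: f"{len(pages)} page(s) read for '{q}'", ["query", "pages"]),
]
outputs = run_graph(graph)
print(outputs["search"])  # which websites were searched
print(outputs["answer"])  # the final response
```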

What’s more, we could make the currently black-boxed research process editable and reusable:

We could see when obvious sources were missing from the research

We could add additional prompts to help identify missing sources

We could change the depth of the search for certain domains and so on.

This seems like it would be a pretty useful tool. It would be especially useful to see this graph being built in real time as the language model makes assumptions about search terms and collects results, and to be able to interrupt this process at any point. Unfortunately, there are a few barriers preventing us from easily building this in Runchat right now. These are:

Prompt nodes can’t currently manipulate the canvas

Each Runchat runs only once; nothing runs in a loop without user input

To explore the idea of Visual Research, we will first need to implement smarter loops in Runchat.

Loops vs Parallel Execution

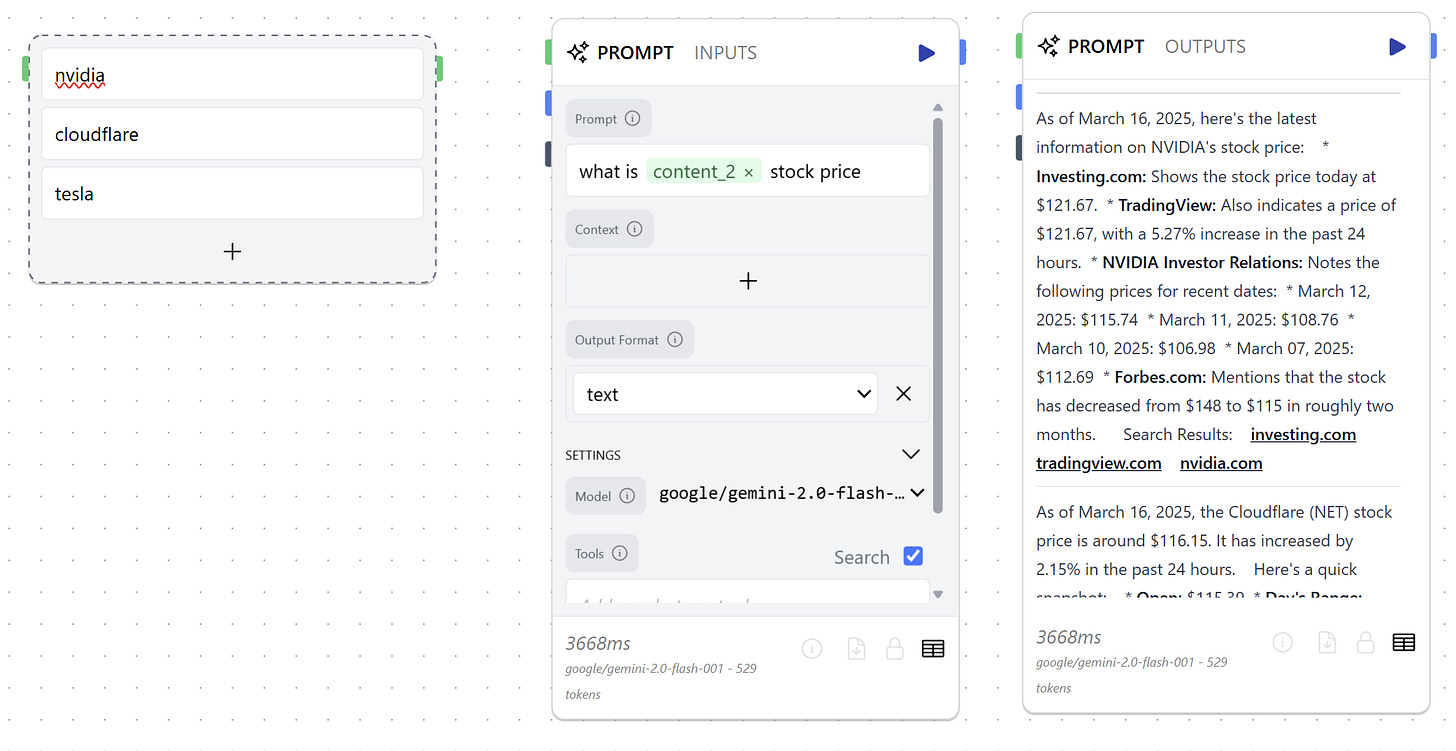

Runchat already supports parallel execution: if we connect a list of search terms to the prompt input of a prompt node and enable search, we can iterate over these inputs and generate a result for each.

Searching in parallel

For some parts of our visual research application, parallel execution will be sufficient. For instance, our language model might create a list of search terms that we loop through to collect the top 10 search results for each. We might then loop through these results as well and retrieve each page’s HTML. However, at some point we will want to run a loop for an undetermined number of iterations: until we find what we’re looking for, or until we give up. This is the new feature that we need to build.
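The two fixed-size stages described above can be sketched in Python with `concurrent.futures.ThreadPoolExecutor`. Here `search_top_results` and `fetch_html` are hypothetical stand-ins for a search API and an HTTP fetch. Because the number of items at each stage is known up front, a parallel map is enough. What this pattern cannot express is “keep going until we find it”.

```python
from concurrent.futures import ThreadPoolExecutor

def search_top_results(term: str, k: int = 10) -> list[str]:
    # Hypothetical stand-in for a search API returning the top-k result URLs
    return [f"https://example.com/{term}/{i}" for i in range(k)]

def fetch_html(url: str) -> str:
    # Hypothetical stand-in for an HTTP fetch of the page HTML
    return f"<html>{url}</html>"

def fan_out(terms: list[str]) -> dict[str, str]:
    with ThreadPoolExecutor(max_workers=8) as pool:
        # Stage 1: one search per term, run in parallel
        result_lists = list(pool.map(search_top_results, terms))
        urls = [url for results in result_lists for url in results]
        # Stage 2: fetch every result page in parallel
        pages = list(pool.map(fetch_html, urls))
    return dict(zip(urls, pages))

pages = fan_out(["loops", "agents"])
print(len(pages))  # 2 terms x 10 results = 20 pages
```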

Runchats running Runchats

The saying goes that most “agentic” AI apps are just language models in a for loop, but really they are language models in a “while” loop. In order for our Runchat to continue searching until some condition is met (AKA research), we need a way for our Runchat to re-run itself.
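A minimal Python sketch of that “while” loop, assuming a hypothetical `step` function that represents one run of a Runchat and returns outputs that are fed back in as the next run’s inputs. The `max_iterations` guard is our own addition so that the sketch always terminates. The toy example also uses the run’s own history to avoid repeating a search term.

```python
from typing import Any, Callable

State = dict[str, Any]

def run_while(
    step: Callable[[State], State],
    state: State,
    done: Callable[[State], bool],
    max_iterations: int = 10,
) -> State:
    """Re-run `step`, feeding its outputs back in, until `done` is true."""
    iteration = 0
    while not done(state) and iteration < max_iterations:
        state = step(state)  # outputs of this run become inputs to the next
        iteration += 1
    return state

# Toy search: candidate terms and the one (hypothetical) term that yields an answer
candidates = ["runchat", "runchat loops", "runchat loop limit"]
answers = {"runchat loop limit": "10 iterations"}

def step(state: State) -> State:
    # Pick the first term not already tried, using the run's own history as context
    term = next(t for t in candidates if t not in state["tried"])
    return {"tried": state["tried"] + [term], "answer": answers.get(term)}

final = run_while(step, {"tried": [], "answer": None}, lambda s: s["answer"] is not None)
print(final["tried"])   # ['runchat', 'runchat loops', 'runchat loop limit']
print(final["answer"])  # 10 iterations
```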

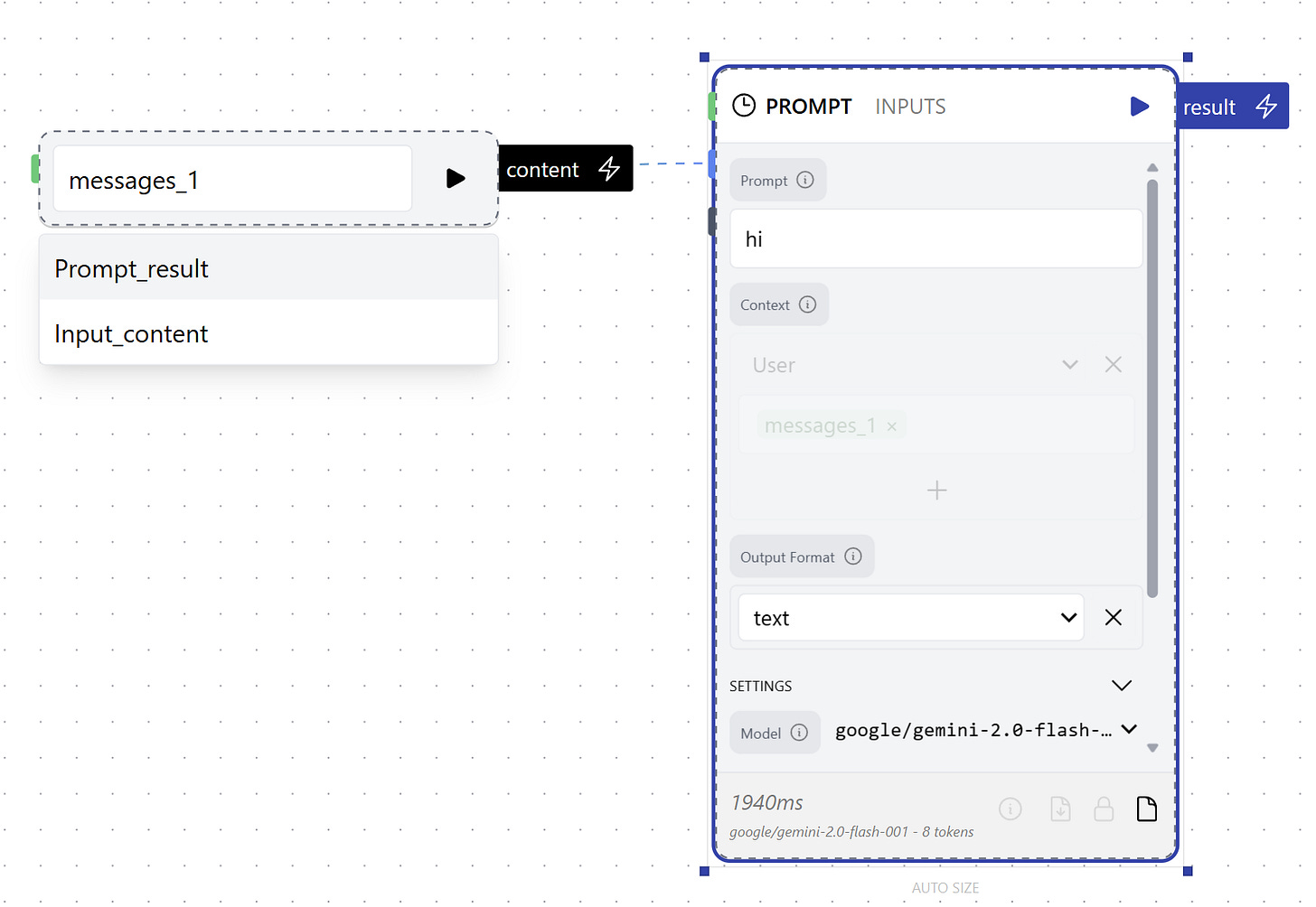

Referencing a downstream parameter with the Loop input node

Generally speaking, visual programming tools don’t allow you to pass data “upstream” without some additional configuration, to prevent infinite loops that will crash your laptop. Runchat allows passing data upstream using either template strings or the Loop input node, but we don’t have to worry about infinite loops because every node in a Runchat can only ever be triggered once per run. Runchat also checks for edges that would create cycles and prevents them from being created, for example if someone tried to connect the output of a node to itself:

Turtles all the way down: this is how you crash a computer
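A cycle check like this could work along the following lines. Adding an edge from `source` to `target` closes a cycle exactly when `target` can already reach `source`, and a node wired to itself is the trivial case. This is a minimal Python sketch using a depth-first search over a hypothetical `Canvas` adjacency map, not Runchat’s actual code.

```python
from collections import defaultdict

class Canvas:
    """Hypothetical canvas holding directed edges between node ids."""

    def __init__(self) -> None:
        self.edges: defaultdict[str, set[str]] = defaultdict(set)  # node -> downstream nodes

    def _reaches(self, start: str, goal: str) -> bool:
        # Iterative depth-first search from start, looking for goal
        stack, seen = [start], set()
        while stack:
            node = stack.pop()
            if node == goal:
                return True
            if node in seen:
                continue
            seen.add(node)
            stack.extend(self.edges[node])
        return False

    def connect(self, source: str, target: str) -> bool:
        # source -> target closes a cycle iff target already reaches source
        # (this also rejects a self-loop, where source == target)
        if self._reaches(target, source):
            return False
        self.edges[source].add(target)
        return True

canvas = Canvas()
print(canvas.connect("search", "summarise"))  # True
print(canvas.connect("summarise", "answer"))  # True
print(canvas.connect("answer", "search"))     # False: would create a cycle
print(canvas.connect("search", "search"))     # False: node connected to itself
```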

However, we now want to support exactly this use case. We want a prompt node to be able to check its own context to make sure it doesn’t use the same search term twice, or to know which links it has already clicked on, and so on. To let a language model run in a loop in Runchat, we also need to let users create these loops, and this presents a lot of potential problems:

Someone might decide to run a computationally intensive loop for a long time… $$$

Someone might decide to run an expensive prompt in a loop for a long time… $$$

Someone might decide to run a loop with a long fetch operation for a long time, effectively rendering Runchat unusable in the meantime

Someone might use a node that has a loop in it, and end up in one of the above situations without knowing

These are just a few of the reasons we haven’t implemented loops already, but in order to explore the Visual Research idea we’re adding them with a couple of hard limitations that will be removed as we learn more about how people are using these tools:

Loops can only run for a max of 10 iterations

Loops can be interrupted (at least in the editor)
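A minimal sketch of these two limits, assuming a hypothetical `run_loop` helper. The requested iteration count is clamped to 10, and the loop checks a `threading.Event` (set, say, by a stop button in the editor) before each iteration.

```python
import threading
from typing import Any, Callable

MAX_ITERATIONS = 10  # the hard cap described above

class LoopInterrupted(Exception):
    pass

def run_loop(step: Callable[[Any], Any], state: Any, stop: threading.Event,
             max_iterations: int = MAX_ITERATIONS) -> Any:
    # A user-configured limit can never exceed the hard cap
    max_iterations = min(max_iterations, MAX_ITERATIONS)
    for i in range(max_iterations):
        if stop.is_set():
            raise LoopInterrupted(f"stopped before iteration {i + 1}")
        state = step(state)
    return state

stop = threading.Event()

def step(n: int) -> int:
    if n == 3:
        stop.set()  # simulate the user pressing stop in the editor mid-run
    return n + 1

try:
    run_loop(step, 0, stop, max_iterations=50)
except LoopInterrupted as err:
    print(err)  # stopped before iteration 5
```

Because the stop flag is only checked between iterations, a step stuck in a long fetch still finishes before the loop stops; cancelling the fetch itself would need a separate mechanism.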

Implementing Loops

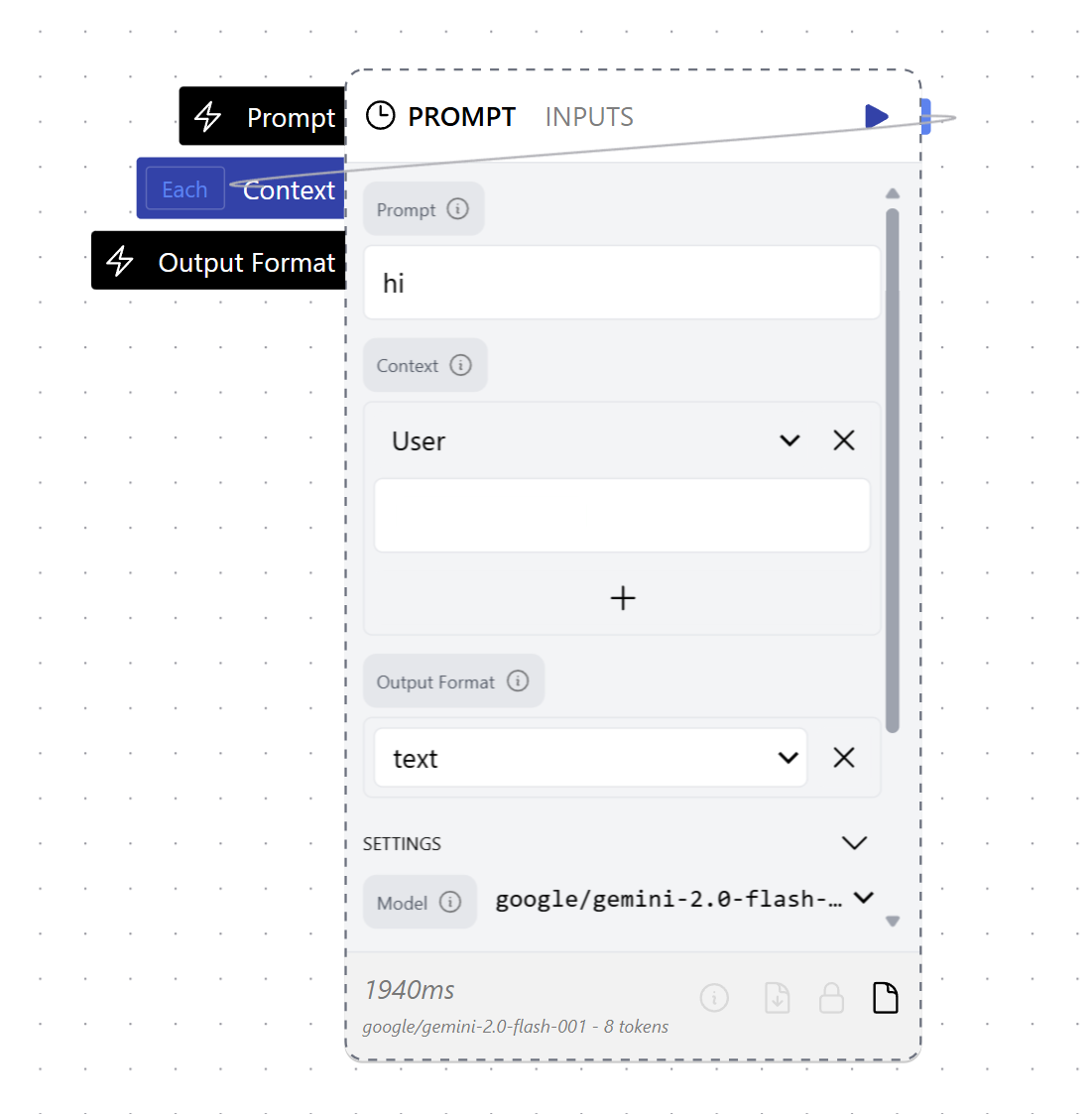

To implement loops, we need to differentiate between normal edges, which pass data from one node to another, and special loop edges, which not only pass data but also store conditions (current and maximum iterations, “while” conditions) and trigger re-runs of the group of nodes contained within the loop.

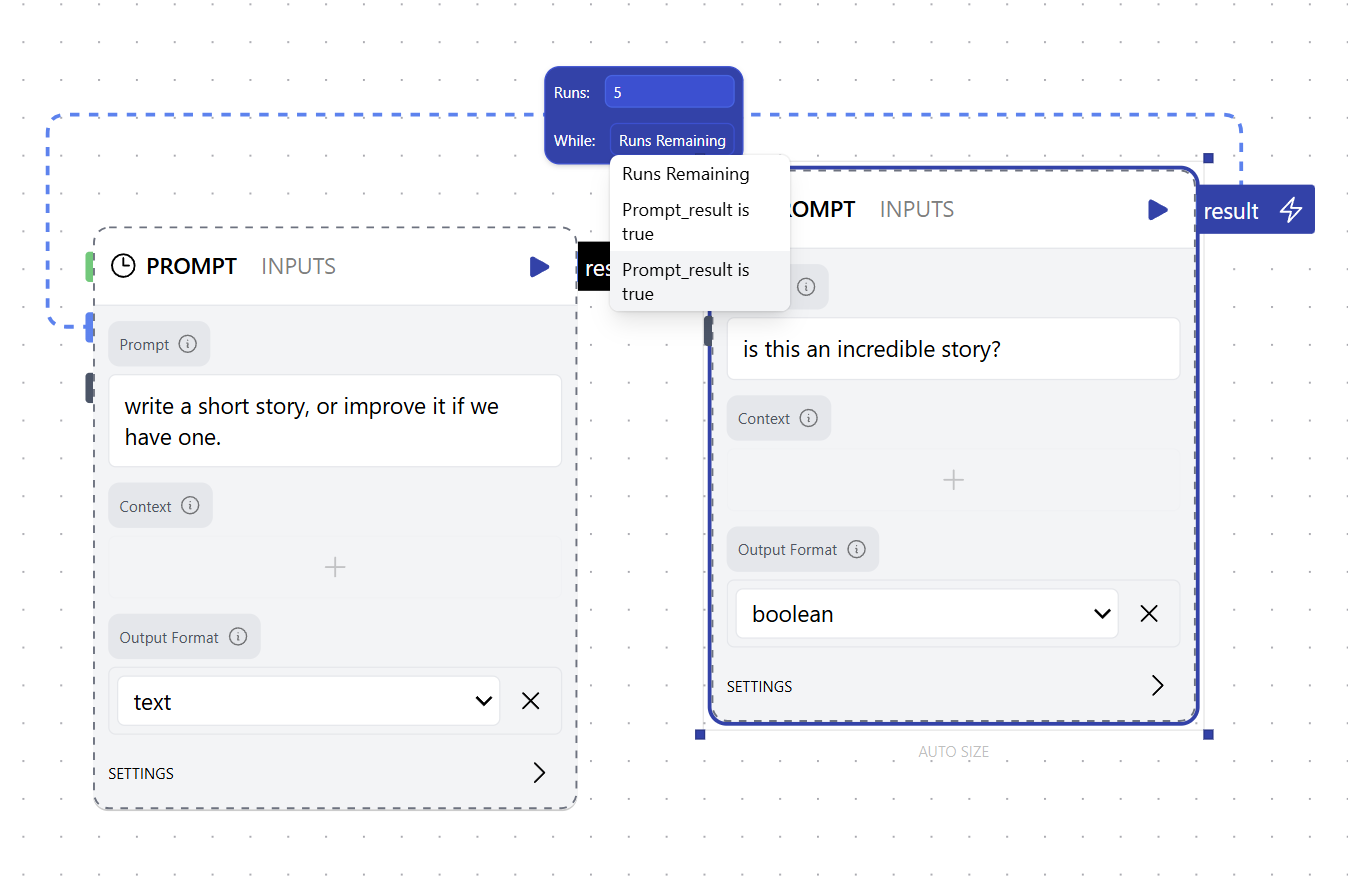

Loop edges are differentiated from normal edges by being drawn ‘around’ the nodes.

We can edit the properties of a loop edge by clicking the tag in its middle. This lets us set the maximum number of iterations for the loop, and optionally reference an output parameter as a “while” condition. When this output parameter is set, Runchat checks whether it evaluates as true and terminates the loop if it doesn’t.

Infinite stories
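A Python sketch of a loop edge under these assumptions. A hypothetical `LoopEdge` stores its current and maximum iterations and an optional `while_param`. A hypothetical `run_with_loop` then re-runs the enclosed group until either limit says stop. Python truthiness stands in for “can be evaluated as true”, and Runchat’s exact rules may differ.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Optional

Outputs = dict[str, Any]

@dataclass
class LoopEdge:
    source: str                        # node whose output is passed back upstream
    target: str                        # node that receives it on the next run
    group: list[str] = field(default_factory=list)  # nodes re-run on each iteration
    max_iterations: int = 10
    while_param: Optional[str] = None  # output parameter used as the "while" condition
    current_iteration: int = 0

    def should_continue(self, outputs: Outputs) -> bool:
        if self.current_iteration >= self.max_iterations:
            return False
        if self.while_param is None:
            return True  # no condition: run until max_iterations
        # Terminate as soon as the referenced output does not evaluate as true
        return bool(outputs.get(self.while_param))

def run_with_loop(edge: LoopEdge, run_group: Callable[[list[str], Outputs], Outputs],
                  outputs: Outputs) -> Outputs:
    outputs = run_group(edge.group, outputs)  # the normal, first run
    edge.current_iteration = 1
    while edge.should_continue(outputs):
        outputs = run_group(edge.group, outputs)  # previous outputs fed back in
        edge.current_iteration += 1
    return outputs

def count_group(group: list[str], outputs: Outputs) -> Outputs:
    # Toy group: "counter" increments, "keep_going" stays true while below 3
    count = outputs.get("counter", 0) + 1
    return {"counter": count, "keep_going": count < 3}

edge = LoopEdge("counter", "counter", ["counter", "keep_going"], while_param="keep_going")
result = run_with_loop(edge, count_group, {})
print(result["counter"], edge.current_iteration)  # 3 3
```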

We also want to support loops within loops, since a lot of the time we might have a parent “control loop” and many intermediate sub-task loops. In Runchat there are two patterns for this:

We have a Runchat node in our loop, and this Runchat contains a loop

We have a loop edge that connects two nodes within a group defined by another loop edge

To support nested loops, we need to reset the iteration counters of loop edges that are contained within an outer loop. We can do this by recursively calling our run function on each group of nodes defined by a loop edge, and checking for loop edges whose source and target nodes also exist in that group.

Maths with nested loops
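To see why the reset matters, and how the numbers multiply, here is a Python sketch of the recursive approach for the second pattern. It assumes a hypothetical `Loop` that records the set of nodes its edge encloses and a fixed iteration count. Each call runs the nodes not covered by a child loop once, then resets and runs each directly nested loop, recursing into its group. Execution order within a group is ignored, since the sketch only counts how many times each node runs.

```python
from dataclasses import dataclass

@dataclass
class Loop:
    name: str
    group: set[str]  # node ids enclosed by this loop edge
    iterations: int  # fixed iteration count, for simplicity
    counter: int = 0

def run_group(nodes: set[str], loops: list[Loop], runs: dict[str, int]) -> None:
    """Run a group of nodes, recursing into any loops nested inside it."""
    # Loops whose enclosed nodes all sit inside this group
    inner = [lp for lp in loops if lp.group < nodes]
    # Only the outermost of those are direct children; deeper ones are reached by recursion
    children = [lp for lp in inner if not any(lp.group < other.group for other in inner)]
    covered = set().union(*(c.group for c in children))
    for node in nodes - covered:
        runs[node] = runs.get(node, 0) + 1  # plain nodes run once per pass
    for child in children:
        child.counter = 0  # reset so the nested loop starts fresh on every outer pass
        while child.counter < child.iterations:
            run_group(child.group, loops, runs)
            child.counter += 1

all_nodes = {"request", "plan", "search", "read", "check", "answer"}
loops = [
    Loop("outer", {"plan", "search", "read", "check"}, iterations=3),
    Loop("inner", {"search", "read"}, iterations=4),
]
runs: dict[str, int] = {}
run_group(all_nodes, loops, runs)
print(runs["plan"], runs["search"])  # 3 12
```

With 3 outer and 4 inner iterations, `search` and `read` run 3 × 4 = 12 times. Without the `child.counter = 0` reset, the inner loop would still be at its limit on the second outer pass, and those nodes would run only 4 times. At the 10-iteration cap, a single level of nesting already means 10 × 10 = 100 runs of the inner nodes, which is why the cost concerns listed earlier compound quickly.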

Where to from here

Now that we support conditionally triggering Runchats, we can start building more powerful automations that run until certain goals or conditions are met. In addition to building out the features to visually chart research processes by language models, we’re excited to explore how tool use by language models might similarly be presented visually using nodes and loops. This should make tool-calling workflows easier to understand and edit. These tool-calling workflows could be saved as Runchats and reused, and this pattern should allow prompt nodes to build Runchats that solve user-defined tasks autonomously.